Real-time Extended Reality (XR) simulations combine the physical scale and immersive presence of Virtual Reality (VR) with physics engines, camera and sensor representations, and custom software to create virtual systems such as manufacturing lines and unit operations. Typically, manufacturing process development and troubleshooting are done with prototype systems. Although component layouts are created with computer-aided design (CAD) tools, little is known about how the system actually functions until a physical prototype is built and tested, so design iterations are time-consuming and expensive. Compared with physical system development, a real-time XR simulation offers significantly faster development and much lower cost.

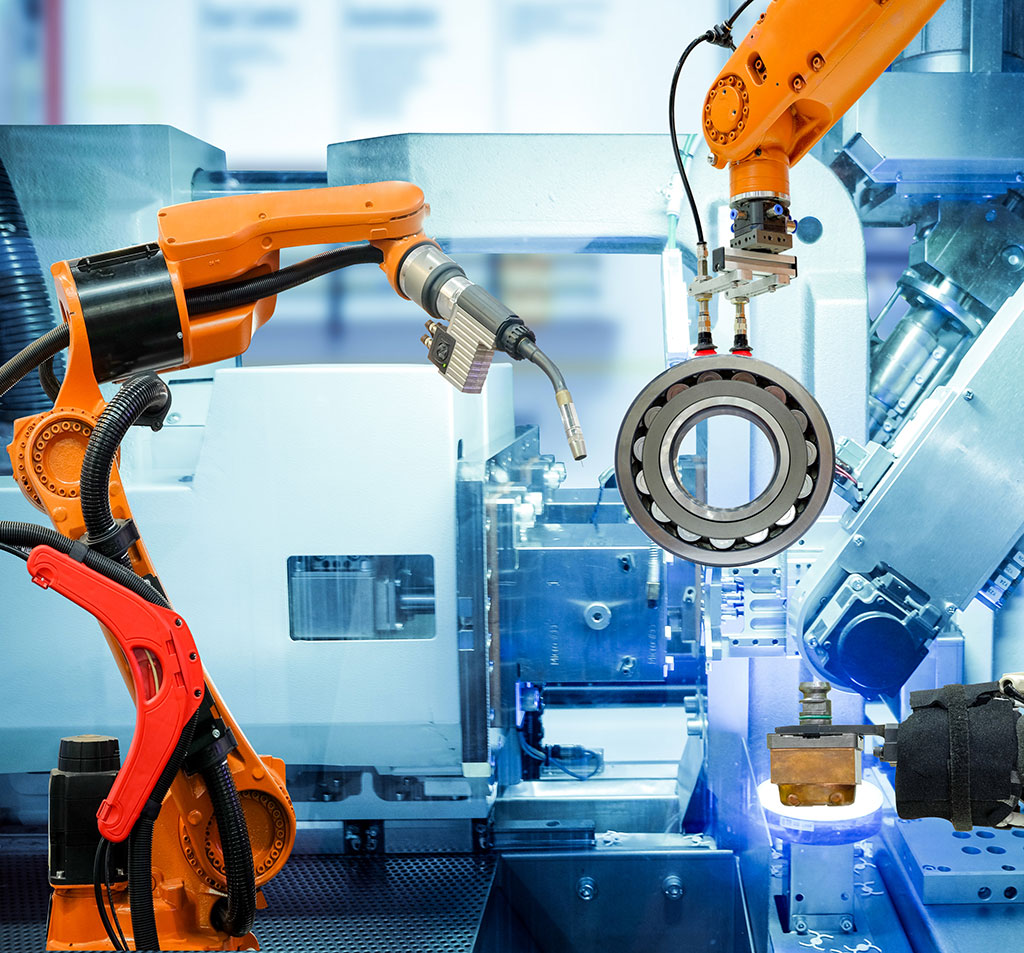

Kinetic Vision's XR simulations, or "digital twins", accurately represent not only the system's layout and motion but also its software control systems. Specialized components such as industrial robots typically come with control and training software, and that software is used to integrate the robot into the overall real-time simulation. Virtual robots and other operations are tested within the simulation. This approach streamlines custom control software development because the code is designed, tested, and debugged within the virtual environment before being deployed on the physical counterpart.

Real-time simulation dramatically shortens process development time and also improves communication and collaboration among functional product teams. The XR environment is deployed concurrently to all groups, which enables training, safety, and maintenance personnel to visualize and understand all aspects of the system, even if they are located thousands of miles away. IoT monitoring sensors and devices are linked in both physical and virtual space, allowing for remote troubleshooting and process monitoring.

Learn more about real-time simulation at Wikipedia.

User-Driven Pick-and-Place Robot

In this simplified demonstration of a real-time simulation, a pick-and-place robot is trained and tested in an XR environment. The user controls the gripper motion, and inverse kinematics is used to update the robot configuration at the same time.
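
To illustrate what inverse kinematics does here, the following is a minimal sketch for a planar two-link arm, not the solver used in the demonstration. The function names `two_link_ik` and `forward_kinematics`, the link lengths, and the two-joint geometry are all assumptions made for illustration; an industrial robot has more joints and would normally rely on a numerical or vendor-supplied solver.

```python
import math


def two_link_ik(x, y, l1=1.0, l2=1.0):
    """Return (shoulder, elbow) joint angles in radians that place the
    gripper of a hypothetical planar two-link arm at (x, y).

    Returns None when the target is outside the reachable workspace.
    """
    d2 = x * x + y * y
    # Law of cosines: the squared distance to the target fixes the elbow angle.
    cos_elbow = (d2 - l1 * l1 - l2 * l2) / (2.0 * l1 * l2)
    if not -1.0 <= cos_elbow <= 1.0:
        return None  # target too far away or too close to the base
    # acos yields one of the two mirror-image elbow solutions.
    elbow = math.acos(cos_elbow)
    # Aim at the target, then subtract the offset introduced by the bent elbow.
    shoulder = math.atan2(y, x) - math.atan2(
        l2 * math.sin(elbow), l1 + l2 * math.cos(elbow)
    )
    return shoulder, elbow


def forward_kinematics(shoulder, elbow, l1=1.0, l2=1.0):
    """Return the gripper position (x, y) for the given joint angles."""
    x = l1 * math.cos(shoulder) + l2 * math.cos(shoulder + elbow)
    y = l1 * math.sin(shoulder) + l2 * math.sin(shoulder + elbow)
    return x, y
```

In a user-driven setup, each new gripper target from the controller would be passed to `two_link_ik`, and the returned angles applied to the virtual robot's joints on the same frame. Feeding those angles back through `forward_kinematics` is a quick way to confirm that the gripper lands on the requested point.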

Synthetic Training Data for Machine Learning Systems

XR simulations are also useful for creating synthetic training data for machine learning systems. In this example, the motion data from a user-driven system was used for imitation and reinforcement learning of virtual robots using TensorFlow. The video below shows the robot behavior midway through the training process. Multiple system instances are used to speed up the training, with the best-performing robot selected after each learning cycle.
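
The select-the-best-instance loop described above can be sketched as follows. This is an illustrative toy rather than the TensorFlow training setup from the example: the `score` function, the parameter vector, and the Gaussian exploration step are all hypothetical stand-ins for a simulated robot's policy and its task reward.

```python
import random


def score(params, target):
    """Toy reward (hypothetical): negative squared distance to a target."""
    return -sum((p - t) ** 2 for p, t in zip(params, target))


def train_population(target, n_instances=8, n_cycles=50, step=0.1, seed=0):
    """Run several instances per cycle and keep the best performer.

    Returns the best parameters found and the best score after each cycle.
    """
    rng = random.Random(seed)
    best = [0.0] * len(target)
    history = [score(best, target)]
    for _ in range(n_cycles):
        # Every instance starts from the current best and explores on its own.
        candidates = [
            [p + rng.gauss(0.0, step) for p in best]
            for _ in range(n_instances)
        ]
        # Keep the incumbent so the best score never gets worse.
        candidates.append(best)
        # Select the best-performing instance for the next learning cycle.
        best = max(candidates, key=lambda c: score(c, target))
        history.append(score(best, target))
    return best, history
```

Calling `train_population([0.5, -0.3])` returns the selected parameters together with a score history that never decreases, because the incumbent is always among the candidates. In a real system each candidate would be a separate simulation instance running a learning update, and the score would come from how well the virtual robot performed the task.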